![DROPS Architecture (from [BBH+98])](img1.png)

on the topic of

Generic Porting of Linux Device Drivers to the DROPS Architecture

Christian Helmuth

TU Dresden

Faculty of Computer Science

Institute for System Architecture

Chair of Operating Systems

July 31, 2001

Declaration of Independent Work

I hereby declare that I completed this work independently, using only the specified aids.

Dresden, July 31, 2001

Christian Helmuth

Modern computer systems are characterized by the ability to fulfill a diverse set of requirements in many different areas. A principal reason for this is the ability to use an individual selection of system components. Purchase price is a factor here, which is why many manufacturers offer different solutions.

The high variance in hardware means that new control software must be developed for each component. These device drivers are often provided by the manufacturer, but they are operating system specific and rarely come with source code.

For research systems like the microkernel-based Dresden Real-Time Operating System (DROPS), no device drivers are provided by the manufacturers. They are nevertheless necessary in order to use devices that go beyond standard hardware such as the processor, DMA (direct memory access) controller, or PIC. Developing drivers independently for each device is not feasible for two reasons: 1. extensive hardware specifications, if available at all, are expensive and come with legal restrictions on their use, and 2. developing and testing device drivers is very time-consuming.

A better solution is to port the device drivers from another operating system that runs on the target architecture, with the requirement not to change the original driver if possible, in order to keep maintenance costs small. Work like [Sta96] proved that it is possible to extract a device driver from an original monolithic system and integrate it unchanged into a microkernel-based architecture.

The next logical step is to generalize this driver-specific process for (almost) all drivers of an operating system. To this end, this work argues for and describes a generic process for porting device drivers from the monolithic Linux kernel to the microkernel-based DROPS.

This work is arranged into five sections which represent the development process of this undertaking as well as conclusions on the design and implementation, including future extensions and applications.

After a brief introduction to the DROPS project, Chapter 2 gives a general discussion of the role of device drivers within an operating system and an examination of Linux [T+01]. Related projects are briefly discussed as well.

In the next section, a suitable architecture for device drivers under DROPS is sketched out. The function and structure of the components involved are also described, with special attention given to the DROPS environment and the Common L4 Environment with regard to their specific requirements.

Chapter 4 describes the general implementation of the described components and provides an example with source code. A performance evaluation of the new device drivers as compared to the original system follows.

Summary and conclusions appear in chapter 6. There is also an update on the current status of the implementation. Further speculation on possible extensions follows.

I would like to cordially thank everyone who supported me during the completion of this work, particularly my mentors Prof. Hermann Haertig and Lars Reuther. I would like to also thank my friends in Schlueterstrasse and elsewhere.

The TU Dresden Operating Systems Group concentrates on research in the realm of multi-server architectures and applications with QoS requirements. The Dresden Real-Time Operating System (DROPS) uses the DROPS microkernel, a second-generation implementation.

The DROPS architecture differs from traditional monolithic operating systems due to its microkernel-based design. The main difference is in the placement of device drivers.

DROPS does not follow the traditional approach of integrating device drivers into the kernel. Being microkernel-based, it allows for the second approach, in which each device driver is located within an external server that uses fast IPC for communication.

One should not completely rule out the colocation of device drivers within DROPS, as it is possible to run them as a program module within the address space of an application. For devices with only one client, e.g. network protocol stacks, console drivers or video drivers, it is possible to configure colocated drivers and thus improve application performance.

Thus, device drivers under DROPS should be written as independent modules with a specified interface, which depending on the required configuration, can be used over IPC or through process colocation.

The operating system provides an abstraction of the actual hardware of a computer system by providing a virtual machine with a defined interface. An example of such an interface definition is the POSIX standard.

The functions which the operating system has to provide include:

For porting device drivers, points 2 and 5 are most interesting. This is where the operating system defines how it handles hardware resources and input and output support. In addition to these points, one must not neglect the influence of flow control with regard to device drivers.

I will present these topics in greater detail, but we must begin with a definition of device driver software.

The Taschenbuch der Informatik defines driver software as follows ([W+95], p. 295):

The device control routines (device drivers) handle the input or output of a device-specific unit, e.g. a character or a block. They observe the protocol determined by the device controller (e.g. issuing commands, querying and evaluating the device status, handling ready messages, sending or receiving payload data).

This means that device drivers represent a software layer between applications or kernel components (e.g. network protocol stack) and the real device. As an integral part of the operating system, they are closely connected to it and normally adapted to the particular system environment.

An important characteristic of device drivers is their protective function. It should not be possible to damage or destroy a device through the use of a driver.

The device driver handles user program requests (system calls) and hardware events (interrupts). For this reason, driver functionality can be split roughly into two partitions, the process level and the interrupt level (Fig. 2.5). The two layers share common data and thus must be synchronized during accesses to the shared area.

The way synchronization is implemented is system dependent. Frequently, solutions are based on queues, locks and the disabling of interrupts. Locking via the disabling of interrupts is the simplest method for synchronization.

For multi-processor operating systems, other solutions must be found. The serialization of system calls, so that only one is active at any one point in time (Linux 2.0), is one method for synchronizing activities on the process level. Another is to implement the kernel as a multithreaded process (Solaris, Linux 2.4). Intelligent locking is critical here, since several process activities and interrupts can run simultaneously on different processors.

Now if the same driver is to be used in different environments, it must be considered that certain kernel functionalities may not exist or may not work as expected.

The devices in a computer system can be arranged into two categories according to their structure and the access patterns.

This classification scheme is not perfect, as some devices are not so easy to categorize. For example, clocks do not provide a character stream and have no addressable blocks. Despite this weakness, a rough organization into block and character devices has become generally accepted in many operating systems.

Further partitioning of these classes is usually system specific (see paragraph 2.5.1). A device driver for a DSP (sound card), although also a character device, can require a far more extensive kernel environment than a serial interface.

It is here that subsystems play a large role. Drivers for SCSI devices, for example, use many features of the SCSI subsystem, which administers queues and performs synchronization. An IDE driver, however, requires a slimmer environment, which is plausible because IDE devices are not as varied as SCSI devices.

The address of a device (at least in UNIX systems) consists of several parts. These are the device type (char or block), the kind of driver (major number) and the unit number (minor number).
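The three-part device address can be illustrated with a small C sketch. It assumes a 16-bit device number with an 8-bit major and an 8-bit minor part, the layout used by Linux kernels of that era; the type and function names (`dev_addr`, `dev_make`, `dev_major`, `dev_minor`) are hypothetical and chosen for illustration only.

```c
#include <stdint.h>

/* Hypothetical illustration of a UNIX-style device address. */
enum dev_type { DEV_CHAR, DEV_BLOCK };

struct dev_addr {
    enum dev_type type;   /* device type: char or block */
    uint16_t number;      /* major in the upper 8 bits, minor in the lower 8 */
};

#define MINOR_BITS 8u
#define MINOR_MASK ((1u << MINOR_BITS) - 1u)

/* Combine device type, driver kind (major) and unit (minor). */
static struct dev_addr dev_make(enum dev_type type, unsigned major, unsigned minor)
{
    struct dev_addr d;
    d.type = type;
    d.number = (uint16_t)(((major & 0xffu) << MINOR_BITS) | (minor & MINOR_MASK));
    return d;
}

/* Kind of driver. */
static unsigned dev_major(struct dev_addr d) { return (unsigned)d.number >> MINOR_BITS; }

/* Unit number handled by that driver. */
static unsigned dev_minor(struct dev_addr d) { return d.number & MINOR_MASK; }
```

For example, `dev_make(DEV_BLOCK, 8, 17)` describes unit 17 of the block driver registered under major number 8.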

An important aspect of the kernel environment is the threading model, i.e. the semantics of the synchronization and scheduling in the operating system. It describes when and if a thread can block, and what happens to it if it does.

Such a design is really only useful in systems that want to avoid blocking in the kernel and require system calls to always run to completion. An example is the Exokernel.

A second (and more frequent) possibility is to provide a kernel stack for each process. If a process blocks in the kernel, its state is preserved on its dedicated kernel stack, and the process can later continue without problems using the information stored there. Examples of such blocking operating systems are Windows NT and most UNIX versions, including Linux.

I will go into more detail on operating systems which use the second model, as they are the most common. If several processes are permitted to be in the kernel at the same time (reentrance), scheduling must be taken into account, in particular the displacement (preemption) of a process, i.e. the release of the CPU.

Preemptive scheduling is particularly useful for real-time systems, since higher priority processes can interrupt lower priority processes.

This is the traditional UNIX model, and with the exception of a few newer developments, the prevailing strategy.

I would like to point out that this refers to the scheduling of kernel activities. User activities are usually subject to a different scheduling strategy.

The system resources that are interesting in this discussion are those concerned with input and output, and are thus the interface to the hardware. Specifically, these are the I/O address ranges, DMA (direct memory access) channels and interrupts.

Since a large number of extension cards and devices can exist in a computer system, I/O resources must be intelligently administered. The devices must export registers in order to be able to communicate with the device drivers or the CPU. This is accomplished by inserting the registers into an area addressable by the CPU (Mapping). Which address area is used is dependent on the hardware architecture. In PC systems, there are two possible options: the I/O address (port) area and the memory address area (memory mapped I/O).

The operating system must ensure that no two device drivers use the same I/O area. If different device drivers should happen to request overlapping areas, either something is misconfigured, there has been an implementation error, or a genuine hardware conflict exists, because the allocation of addresses to device registers is unique.

There are also resources that are controlled in other ways, e.g. DMA channels for ISA devices. The DMA controllers make 7 channels available for direct transfer of data between primary storage and the ISA bus. Only one device can be assigned to each channel, which must be enforced by the operating system.

The control of I/O is the job of a device driver. General functionality is organized into subsystems, which use different drivers of the same driver class.

When considering I/O control, the subsystem must be taken into account along with the device driver. Such subsystems exist in many kernels for SCSI, IDE and sound devices. Additionally, there can be subsystems for terminal devices, e.g. serial interface or keyboard.

The TU Dresden Operating Systems Group has done past projects which involved device drivers from the Linux environment, and this past work served as a basis for this work [Sta96, Meh96, Pau98]. Linux is highly suited to this type of work because its source code is freely available and it is widely used by research institutions.

For this work, the Linux kernel version 2.4 was selected as a reference. However, the Linux kernel has undergone three years of work since the previous efforts at Dresden, so conclusions from that work do not necessarily map 1:1 to the current 2.4 kernel version.

If one examines the source code of the Linux kernel, one will see that nearly 50 percent of it is in the drivers/ subdirectory, which contains the code used to access the hardware. In addition to the device driver portion of the kernel, there are also global subsystems such as the SCSI subsystem.

Fig. 2.6 shows the organization of the device drivers under Linux. The highest level is divided into the two previously discussed classes, block and char, as well as into networking devices. Since access to the latter only takes place indirectly via BSD sockets (and the network protocol stack), they are kept in their own group.

Block devices under Linux are very diverse in nature. SCSI block devices are supported, as are those that use the IDE interface. Additionally, device drivers for proprietary hardware such as older CD-ROM drives exist.

Character devices are divided into tty, misc, and raw devices. Devices in the first two groups are in principle also raw devices, but additional software layers are placed between the device drivers and the interface. The tty group has code for terminal devices, and the misc group is for hardware like the PS/2 mouse.

This partitioning is not sufficient for determining the necessary subsystems. It does reveal that a terminal driver will not function without the terminal subsystem, consisting of the registry and line disciplines, but it does not reveal that a raw device like the SCSI streamer needs the SCSI subsystem to function.

A uniform partitioning can be defined by answering both these questions:

The same subsystem can simultaneously support several device drivers that export different interfaces. The example mentioned above describes this case: the SCSI streamer and a SCSI fixed disk use the same subsystem, but they export different interfaces.

The threading model of the Linux kernel is based on the blocking model, and activities in the kernel are not preempted by other process-level activities. Hardware events (interrupts), however, can cause an immediate preemption if they are not disabled.

In order to make the kernel more efficient within the server realm, great importance was attached to maximizing parallelism in multiprocessor systems, and thus the intelligent locking mentioned in paragraph 2.2 is used extensively.

Synchronization in the Linux kernel is based upon a few basic building blocks and support functions. The basic building blocks are:

It is here that one sees one of the complexities caused by multiprocessor systems: on a single processor, busy waiting for the release of a critical section is a certain deadlock in non-preemptive environments such as the Linux kernel.

Semaphores and spin locks are not recursive in Linux. Repeated requests for the same resource can thus lead to a deadlock.

If one uses these mechanisms sensibly, a high degree of parallelism can be achieved. This is how the SMP gains of the network protocol stack are achieved.

Larger groups of functionality, which influence the control flow within the kernel, are built from these basic blocks. Several different scenarios are illustrated here using abstract data types and functions. It is important to note which level one is considering:

When a process context voluntarily releases the processor, the yield flag is set in its policy field and schedule() is called. Other process level activities can now utilize the CPU.

Instead of remaining ready as in the procedure described above, a process can also block by modifying its state in its control block before calling the schedule() function. [BC01], p. 75, states:

Processes in a TASK_INTERRUPTIBLE or TASK_UNINTERRUPTIBLE state are subdivided into several classes, each of which corresponds to a specific event. In this case, the process state does not provide enough information to retrieve the process quickly, so it is necessary to introduce additional lists of processes. These additional lists are called wait queues.

A process context can thus wait for a certain event: it is placed into a wait queue and schedule() is called.

The function sleep_on() is used for this purpose to make the described process transparent. I.e. when a call to this function returns, the entire cycle consisting of queueing, blocking, waking, and unqueueing has been accomplished. The process context is now in the ready state. Waking up takes place in the wake_up() function.

It does not matter whether the wake-up is issued from a process or an interrupt context; both cases exist in the kernel. However, these functions are not used in every case. Some device drivers reproduce the procedure themselves (see paragraph 4.3).

Additionally, not all activities on the interrupt level run at the same priority, so interrupt handlers can preempt certain activities on the CPU. During the processing of an interrupt, further interrupts are often disabled on that CPU, which makes it necessary to divide the code into three different groups:

In the Linux kernel, the first two groups are grouped together under the term top half and are the actual registered interrupt handlers. The last group falls under the term bottom half (BH), and the execution of that code takes place at a later point in time. Fig. 2.7 shows the structure of a Linux device driver as described in paragraph 2.2.

The actions of an interrupt handler are activated by a hardware interrupt which causes the top half to be run. The top half will schedule the bottom half for later execution.
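The division of labor between the two halves can be sketched in a few lines of C. This is a simplified model, not kernel code: the names `top_half`, `bottom_half` and `run_pending_bottom_halves` as well as the counters are hypothetical, and the pending flag stands in for the kernel's real bookkeeping of deferred activities.

```c
#include <stdbool.h>

/* Hypothetical driver state shared between both halves. */
static volatile bool bh_pending = false;
static int raw_events = 0;        /* counted by the top half */
static int processed_events = 0;  /* updated by the bottom half */

/* Top half: runs on the hardware interrupt, does the minimum and defers the rest. */
static void top_half(void)
{
    raw_events++;        /* e.g. acknowledge the device and save its status */
    bh_pending = true;   /* mark the bottom half for later execution */
}

/* Bottom half: runs later and performs the time-consuming work. */
static void bottom_half(void)
{
    processed_events = raw_events;
}

/* Called at a safe point, e.g. before control returns to the process level. */
static void run_pending_bottom_halves(void)
{
    if (bh_pending) {
        bh_pending = false;
        bottom_half();
    }
}
```

Several interrupts may arrive before the deferred part runs once; in this sketch the bottom half then handles all of them in a single pass.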

As the Linux kernel was developed, these concepts became more refined. In addition to the old style bottom halves, newer deferred activities were created:

In the future, BHs are to be gradually replaced by tasklets. Softirqs, however important to kernel activities, are to remain reserved and are so far only planned for use in the TCP/IP stack.

The Linux kernel administers resources with the help of a very straightforward and general concept: arbitrary resource management. This is part of the architecture-independent section of the kernel and can easily be ported to each architecture the kernel runs on. PC systems administer two address areas using this kernel component: I/O ports and I/O memory (see paragraph 2.4.2).

The interface to the component consists of functions for requesting and releasing portions of the respective address areas. Another function is used for determining the availability of these resources.
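A minimal sketch of such an interface, assuming a fixed-size table of inclusive address ranges, could look as follows. The names `res_check`, `res_request` and `res_release` are hypothetical stand-ins and are not the Linux functions themselves; the real kernel component keeps a more elaborate resource tree.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_REGIONS 32

/* One allocated range [start, end], both bounds inclusive. */
struct region { unsigned long start, end; bool used; };

static struct region io_ports[MAX_REGIONS];

/* Availability check: true if [start, start+len-1] overlaps no allocated range. */
static bool res_check(unsigned long start, unsigned long len)
{
    unsigned long end = start + len - 1;
    for (size_t i = 0; i < MAX_REGIONS; i++)
        if (io_ports[i].used && start <= io_ports[i].end && io_ports[i].start <= end)
            return false;
    return true;
}

/* Request: returns 0 on success, -1 if the range is busy or the table is full. */
static int res_request(unsigned long start, unsigned long len)
{
    if (len == 0 || !res_check(start, len))
        return -1;
    for (size_t i = 0; i < MAX_REGIONS; i++) {
        if (!io_ports[i].used) {
            io_ports[i].start = start;
            io_ports[i].end = start + len - 1;
            io_ports[i].used = true;
            return 0;
        }
    }
    return -1;
}

/* Release: frees exactly the range that was requested before. */
static void res_release(unsigned long start, unsigned long len)
{
    for (size_t i = 0; i < MAX_REGIONS; i++)
        if (io_ports[i].used && io_ports[i].start == start &&
            io_ports[i].end == start + len - 1)
            io_ports[i].used = false;
}
```

A second driver requesting a range that overlaps an existing allocation is rejected, which is exactly the conflict the operating system has to detect.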

In addition to this concept, the kernel uses a similar interface to administer ISA DMA channels and interrupts, allowing the latter to be used by several device drivers at the same time (shared interrupts). In the case of shared interrupts, the handlers are called in the order in which they were registered.
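The calling order for shared interrupts can be sketched as a simple table per interrupt line. All names here (`irq_line`, `irq_register`, `irq_dispatch`, `log_handler`) are hypothetical, and the return convention (1 if the device raised the interrupt) is an assumption made for the example.

```c
#include <stddef.h>

#define MAX_SHARED 4

/* Handler returns 1 if its device raised the interrupt, 0 otherwise. */
typedef int (*irq_handler_t)(void *dev_id);

struct irq_line {
    irq_handler_t handler[MAX_SHARED];
    void *dev_id[MAX_SHARED];
    size_t count;
};

/* Append a handler; registration order defines calling order. */
static int irq_register(struct irq_line *line, irq_handler_t h, void *dev_id)
{
    if (line->count == MAX_SHARED)
        return -1;
    line->handler[line->count] = h;
    line->dev_id[line->count] = dev_id;
    line->count++;
    return 0;
}

/* Call every registered handler in registration order. */
static int irq_dispatch(struct irq_line *line)
{
    int handled = 0;
    for (size_t i = 0; i < line->count; i++)
        handled += line->handler[i](line->dev_id[i]);
    return handled;
}

/* Example handler: appends its one-character device tag to a call log. */
static char call_log[MAX_SHARED + 1];
static size_t call_len;

static int log_handler(void *dev_id)
{
    if (call_len < MAX_SHARED)
        call_log[call_len++] = *(const char *)dev_id;
    return 1;
}
```

Because every handler on the line is called, each driver has to check whether its own device actually raised the interrupt.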

All device driver specific components are stored in the drivers/ subdirectory of the kernel sources. This includes the subsystems as well as the device drivers themselves (Fig. 2.8).

    ...
    drwxr-xr-x 3 krishna krishna 4096 Jul 6 17:48 block/
    drwxr-xr-x 2 krishna krishna 4096 Jul 6 17:48 cdrom/
    drwxr-xr-x 9 krishna krishna 4096 Jul 6 17:48 char/
    ...
    drwxr-xr-x 13 krishna krishna 8192 Jul 6 17:48 net/
    ...
    drwxr-xr-x 4 krishna krishna 8192 Jul 6 17:48 scsi/
    drwxr-xr-x 3 krishna krishna 4096 Jul 6 17:48 sgi/
    drwxr-xr-x 5 krishna krishna 4096 Jul 6 17:48 sound/
    ...

Unfortunately, only network device drivers were considered in this work, which makes the solution relatively specific. As pointed out in [Sta96], the Linux system uses specific driver interfaces for the different device classes, and no general handling takes place.

This driver code was later ported to L4 as well.

Later, in his diploma thesis, he used the results for the development of a verifiable SCSI subsystem for DROPS and created a port of the SCSI driver for L4, among other things.

Both works represent device class specific solutions based on the Linux device driver source code.

Unfortunately, functionality is limited to the device drivers included in the OSKit. Additionally, the implementation of the work is incomplete and is currently not yet available.

Device drivers under SawMill Linux are also (with restrictions) reusable components. Thus, this architecture is very similar to DROPS. Solutions for Linux char, block and net drivers exist to varying degrees.

The goal of this work is to design a framework for using Linux device drivers within DROPS. The device drivers are to be used from their unmodified sources. Additionally, it is the responsibility of the environment to avoid conflicts between different drivers as well as to administer the hardware resources.

During the design of such an environment many different aspects must be considered, such as synchronization, memory management, shared resources and interrupts. Other specifications for device driver environments, e.g. [UDI99], are therefore very useful and contain detailed descriptions of the interfaces of their components.

A generalized process for the development of device driver environments for microkernel-based systems such as DROPS will be described, from general observations up to and including specific examples.

In the rest of this document, I will abbreviate this environment as DDE -- Device Driver Environment.

As described in paragraph 2.1, it is the philosophy of DROPS to allow system components (and applications) to run as independent servers on top of the microkernel. Address space boundaries are thus situated between the device drivers and other DROPS servers, which means that a suitable kernel mechanism must be used for communication: interprocess communication (IPC).

The DDE must provide all the functionality used by the device drivers in the original system. It is obvious that a complete emulation is neither possible nor meaningful. Therefore the DDE is limited to the fundamental functions without which the drivers would not work, and divides these into functions that must be centralized and functions that can be decentralized.

This distinction makes it possible to place access to critical or global resources, which needs to be synchronized, into a separate central component that offers a suitable IPC interface.

I will now discuss the DDE components which provide the above mentioned functions.

Each operating system has a central component which administers hardware resources in order to avoid access conflicts. As can be seen in Tab. 3.1, programming of the interrupt controller and the PCI bus also needs to be centrally controlled.

If device drivers were allowed arbitrary access to these resources, conflicts would occur, since many operations cannot be performed atomically (and are thus inherently not serializable) but require several steps.

An example of this is PCI bus configuration. Here, two 32-bit ports, the address port and the data port, are used in succession: 1. the address of the device register to be used is written to the address port, and 2. the configuration register is read or written by a read or write operation on the data port.
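The two-step access can be made concrete with a short C sketch. It assumes the standard PC configuration mechanism with the address port at 0xCF8 and the data port at 0xCFC; the port accesses themselves are simulated by plain variables (`sim_address_port`, `sim_config_space`, both hypothetical), since real port I/O requires privileges and, in the DDE, would go through the central I/O server.

```c
#include <stdint.h>

#define PCI_CONFIG_ADDRESS 0xCF8u  /* address port */
#define PCI_CONFIG_DATA    0xCFCu  /* data port */

/* Build the 32-bit value that selects a configuration register. */
static uint32_t pci_conf_address(unsigned bus, unsigned dev, unsigned func, unsigned reg)
{
    return 0x80000000u                          /* enable bit */
         | ((uint32_t)(bus  & 0xffu) << 16)     /* bus number */
         | ((uint32_t)(dev  & 0x1fu) << 11)     /* device number */
         | ((uint32_t)(func & 0x07u) << 8)      /* function number */
         | ((uint32_t)reg & 0xfcu);             /* dword-aligned register offset */
}

/* Simulated port pair for one device; a real implementation would use port I/O. */
static uint32_t sim_address_port;
static uint32_t sim_config_space[64];

static void sim_write_address_port(uint32_t value) { sim_address_port = value; }

static uint32_t sim_read_data_port(void)
{
    return sim_config_space[(sim_address_port & 0xfcu) >> 2];
}

/* Both steps form one logical operation and must not be interleaved
 * with the configuration access of another driver. */
static uint32_t pci_read_config(unsigned bus, unsigned dev, unsigned func, unsigned reg)
{
    sim_write_address_port(pci_conf_address(bus, dev, func, reg)); /* step 1 */
    return sim_read_data_port();                                   /* step 2 */
}
```

If a second driver wrote the address port between step 1 and step 2, the first driver would read the wrong register, which is why these accesses are centralized.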

In the DROPS DDE the described component is the I/O Server:

The design of the I/O server is shown in Fig. 3.1. The interfaces are provided by two threads, which register themselves with the name service as ioserver and omega0. For the omega0 server, the functionality is exactly as described in [LH00]. The rest of the functionality, concerning resource management requests and the PCI bus, is provided by the ioserver thread.

Another activity provided by this server is the timer. With the help of the microkernel timer, which is accessible from the kernel info page, it makes time values available through an information page that is mapped into each client during initialization and provides a DDE time base.

The I/O server is additionally based on functionality of the Common L4 Environment and thus also contains its local environment.

The first question to ask is which types of concurrency can exist in a device driver. Unfortunately, it is not possible to give a general answer to this question, as it really depends on the device class. Thus, if a solution is to be created that can handle all possible requirements of all possible drivers, it would have to be designed as a very heavyweight single-thread solution (as in [Sta96, Meh96]).

One potential source of concurrency is user requests at the process level. The driver must use locks in this case, or only permit a single request at a time. It is also possible for a device driver to export several interfaces, which can be accessed in parallel. Examples are drivers for sound cards, which export interfaces for both the signal processor (PCM data) and the mixer chip.

Apart from these activities, hardware interrupts occur as well as the associated deferred activities, which should also be regarded as potentially concurrent. The following pattern emerges:

One advantage of this design is the ability to assign each activity a separate thread priority. The interrupt thread can react immediately to interrupts and perform the necessary processing in order to achieve a small latency. Deferred activities have a slightly lower priority and can be interrupted by the interrupt servicing thread. Similarly, interface threads have the lowest priority and are interruptible by either the interrupt servicing thread or the deferred activity thread.

This emulates the behavior in the Linux kernel rather exactly in that a process activity leaves the kernel only after completing processing of all pending functions.

The disadvantage is the increased number of L4 threads compared with the earlier solutions, but this additional expenditure should not be too large. Additionally, Linux device drivers inherently support concurrency, which will be interesting in light of an SMP Fiasco system.

If one examines which kernel functions are used by different device drivers, it will be noticed that drivers of the same class tend to use the same functions. A portion of these functions is used by all device drivers. From this, it is possible to divide the kernel functionality into three groups.

This partitioning of functionalities allows for a simplified configuration with regards to the respective driver classes. Additionally, performance gains can be achieved by optimizing call paths.

Various functions are used by Linux device drivers for allocating and releasing memory with varying degrees of granularity.

As shown in Tab. 3.2, pages allocated using vmalloc() can be freely distributed in the physical memory region, as long as they are sequential in the virtual memory region. Due to this characteristic, the virtual memory region cannot be used for DMA.

Kernel memory, however, can be used for DMA (direct memory access) transfers. The remaining three groups allocate memory which is sequential in the physical address area and aligned on page boundaries. Furthermore, a fast way to translate virtual addresses into physical addresses is needed, since peripheral devices cannot deal with virtual addresses.

I will not go into detail about slabs, which provide specialized functionality that is rarely used. Slabs are described in detail in [Bon94]: they implement an object cache and thus optimize allocation.

Using the interface to memory management taken from Linux, the DDE determines the best way to fulfill each request.


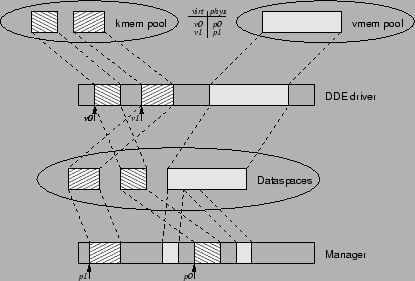

Based on [L+99], the grey blocks shown in Fig. 3.2 are requested from the manager. The entire vmem pool then consists of one dataspace, whose memory pages do not have to be sequential. Pages of the dataspace are mapped in as needed and can be removed later if necessary.

The solution in the DDE is shown in Fig. 3.2 (left side, hatched blocks). The kernel memory pool consists of several dataspaces, which can be requested as necessary and added to the pool. Here each individual dataspace fulfills the requirements of kmem chunks: pinned, sequential and known address mapping.

This solution is advantageous because the initial allocation of one large, physically contiguous memory region (if possible at all at start time) is very static. If only a small region is allocated initially and new dataspaces are requested on demand, the "waste" of these special memory pages is reduced. It must be kept in mind that each driver server needs such areas, so the waste would otherwise multiply.

The physical start address is obtained at dataspace request from the manager and administered locally, so that during an address conversion no IPC becomes necessary.
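A sketch of such a purely local address conversion is shown below. It assumes that the driver keeps one table entry per kmem dataspace with the local virtual start address, the physical start address reported by the manager, and the size; the structure and function names (`kmem_region`, `kmem_add_region`, `kmem_virt_to_phys`) are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define MAX_DATASPACES 16

/* Locally kept description of one pinned, physically sequential dataspace. */
struct kmem_region {
    uintptr_t virt_start;   /* where the dataspace is attached locally */
    uintptr_t phys_start;   /* physical start address obtained at request time */
    size_t size;            /* size in bytes */
};

static struct kmem_region kmem_pool[MAX_DATASPACES];
static size_t kmem_regions;

/* Record a newly requested dataspace in the local table. */
static int kmem_add_region(uintptr_t virt, uintptr_t phys, size_t size)
{
    if (kmem_regions == MAX_DATASPACES)
        return -1;
    kmem_pool[kmem_regions].virt_start = virt;
    kmem_pool[kmem_regions].phys_start = phys;
    kmem_pool[kmem_regions].size = size;
    kmem_regions++;
    return 0;
}

/* Translate without IPC; returns 0 if the address is not part of the pool. */
static uintptr_t kmem_virt_to_phys(uintptr_t virt)
{
    for (size_t i = 0; i < kmem_regions; i++) {
        const struct kmem_region *r = &kmem_pool[i];
        if (virt >= r->virt_start && virt - r->virt_start < r->size)
            return r->phys_start + (virt - r->virt_start);
    }
    return 0;
}
```

The lookup is a linear scan, which is acceptable as long as the pool consists of only a few dataspaces; a larger pool might justify a sorted table.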

Memory allocations at page granularity (get_pages()) are handled similarly. An allocation is mapped to the request of a suitable dataspace, and the freeing to its release. For these areas the virtual-to-physical address mapping has to be kept too.

Linux spin locks and semaphores are mapped onto the implementations of the L4 Environment, L4 semaphores and L4 locks. Disabling interrupts for synchronization is naturally not suitable for the DDE.

Here the approach from [HHW98] is much more interesting, in which the cli()/sti() pairs are mapped onto an implementation with locks. The interrupt_lock is acquired with a call to cli() and released again with sti(). Thus the "disabling of interrupts" behaves like a global mutex and emulates the original environment very well.

The interrupt_lock is local to the driver, i.e. such a lock exists in each driver server. This is possible because all internal operations for which interrupts really must be switched off are completely encapsulated by the Omega0 part of the I/O server, so only the synchronization function has to be emulated.
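The idea can be sketched with a POSIX mutex standing in for the L4 lock. The function names `dde_cli` and `dde_sti`, the flag `irq_lock_held` and the example function `update_shared_state` are hypothetical; the sketch also assumes that cli() calls are not nested, which a plain mutex would not tolerate.

```c
#include <pthread.h>

/* Driver-local lock that stands in for "interrupts disabled". */
static pthread_mutex_t interrupt_lock = PTHREAD_MUTEX_INITIALIZER;
static int irq_lock_held;  /* only modified while holding the lock */

/* Emulated cli(): acquire the driver-local interrupt lock. */
static void dde_cli(void)
{
    pthread_mutex_lock(&interrupt_lock);
    irq_lock_held = 1;
}

/* Emulated sti(): release the lock again. */
static void dde_sti(void)
{
    irq_lock_held = 0;
    pthread_mutex_unlock(&interrupt_lock);
}

static int shared_counter;

/* Interface threads and the interrupt thread would both use this pattern
 * around accesses to data they share. */
static void update_shared_state(int delta)
{
    dde_cli();
    shared_counter += delta;
    dde_sti();
}
```

Since the interrupt thread takes the same lock before touching shared data, a critical section guarded this way excludes the interrupt handler just as a real cli() would on a single processor.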

In the design of the further synchronization and scheduling mechanisms, the two levels of the device driver must again be distinguished:

There is, however, a simpler possibility if one looks at the places in the source code where device drivers call schedule(). Two use cases emerge: either the activity releases the processor (yield), or it blocks for some reason. The difference is that in the first case the process state is not changed. A call of schedule() should then only result in a release of the processor: l4_yield().

As described in paragraph 2.5.2 (flow control), the second case can also occur, in which the activity modifies its process state in order to block. Unblocking is possible only if the process context was previously linked into a wait queue.

Here the thread must "put itself to sleep" until the expected event has occurred, i.e. until another thread "wakes it up". This behavior is best achieved with a context-local binary semaphore that is initialized with 0. Via the task structure, the semaphore can then be acquired in schedule() and released by wake_up() on the wait queues.
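The following C sketch models this behavior with POSIX primitives instead of L4 ones. The structure `dde_task` is a heavily reduced, hypothetical stand-in for the Linux task structure, the binary semaphore is built from a mutex and a condition variable, and `sched_yield()` takes the place of `l4_yield()`; wait queue handling is omitted, so `dde_wake_up()` addresses the task directly.

```c
#include <pthread.h>
#include <sched.h>

#define TASK_RUNNING          0
#define TASK_UNINTERRUPTIBLE  2

/* Hypothetical, heavily reduced stand-in for the Linux task structure. */
struct dde_task {
    int state;
    pthread_mutex_t lock;
    pthread_cond_t cond;
    int sem;               /* context-local binary semaphore, starts at 0 */
};

static void dde_task_init(struct dde_task *t)
{
    t->state = TASK_RUNNING;
    t->sem = 0;
    pthread_mutex_init(&t->lock, NULL);
    pthread_cond_init(&t->cond, NULL);
}

/* Set by the driver before it calls schedule() in order to block. */
static void dde_set_state(struct dde_task *t, int state)
{
    pthread_mutex_lock(&t->lock);
    t->state = state;
    pthread_mutex_unlock(&t->lock);
}

/* Emulated schedule(): yield if the state is unchanged, otherwise block. */
static void dde_schedule(struct dde_task *current)
{
    pthread_mutex_lock(&current->lock);
    if (current->state == TASK_RUNNING) {
        current->sem = 0;                 /* consume a wake-up that came early */
        pthread_mutex_unlock(&current->lock);
        sched_yield();                    /* first case: plain processor release */
        return;
    }
    while (current->sem == 0)             /* second case: sleep until woken */
        pthread_cond_wait(&current->cond, &current->lock);
    current->sem = 0;
    pthread_mutex_unlock(&current->lock);
}

/* Emulated wake_up() for a single waiter. */
static void dde_wake_up(struct dde_task *t)
{
    pthread_mutex_lock(&t->lock);
    t->state = TASK_RUNNING;
    t->sem = 1;                           /* binary: never counts above 1 */
    pthread_cond_signal(&t->cond);
    pthread_mutex_unlock(&t->lock);
}
```

A wake-up that arrives between the state change and the call of schedule() is not lost in this sketch: the state is already TASK_RUNNING again, so schedule() degenerates to a yield.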

Here a disadvantage shows up in our environment: The task structure about 1700 byte and each process context is assigned enclosure an instance. On the other hand in the DDE only few items of the structure are really needed -- it becomes thus memory, verschwendet``. Unfortunately is clearing up of the task_struct Types very much complex, if at all feasible, since many functions, which serve as shortcuts, would have to be addressed certain fields of the structure and also modified.

These modifications would have to be carried forward continually and would make adaptation to new kernel versions more difficult. A suitable solution still has to be found here.

Deferred activities can be triggered by the ISR as described. They are handled by a dedicated thread, which either processes pending functions (calls them) or blocks on a semaphore.

All functions that were centralized in the I/O server must now be called via IPC stubs. This applies to the interrupt handling above just as it does to resource management and PCI access. Since the Linux interface was largely adopted for the latter two components, the function call simply invokes the matching I/O interface function.

The interrupt handling was already described in the preceding paragraph.

Timers and possibly also the /proc file system can be regarded as special requirements of Linux device drivers, although the latter does not necessarily have to be emulated (empty function stubs suffice). A genuine emulation is not particularly complex, however, and makes interesting information or configuration options available (at least for some device drivers).

Timers in Linux are so-called one-shot timers, i.e. the supplied function is executed once at the specified time (in jiffies). The interface consists of three functions that operate on the timer list: add_timer(), del_timer() and mod_timer() (to modify a timer, provided it has not already been processed).

The timers can be executed by a further thread, which in each case waits for the next timer expiry and then calls the function. This thread still has to be added to the model sketched initially (see fig. 3.3).
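A minimal sketch of such a one-shot timer list follows. The names struct dde_timer, dde_add_timer(), dde_del_timer(), dde_mod_timer() and dde_run_timers() are hypothetical stand-ins for the Linux interface; the sketch assumes a single caller (no locking) and ignores jiffies wrap-around. The demo callback at the end exists only to make the example usable on its own.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified timer; Linux's struct timer_list differs. */
struct dde_timer {
    unsigned long expires;              /* absolute expiry in jiffies */
    void (*function)(unsigned long);    /* called once on expiry */
    unsigned long data;                 /* argument for function */
    struct dde_timer *next;
    int pending;                        /* 1 while linked into the list */
};

static struct dde_timer *dde_timer_head;    /* sorted by expires */

void dde_add_timer(struct dde_timer *t)
{
    struct dde_timer **pp = &dde_timer_head;

    assert(t->function != NULL);
    while (*pp && (*pp)->expires <= t->expires)
        pp = &(*pp)->next;
    t->next = *pp;
    *pp = t;
    t->pending = 1;
}

/* Returns 1 if the timer was still pending, 0 otherwise. */
int dde_del_timer(struct dde_timer *t)
{
    struct dde_timer **pp = &dde_timer_head;

    if (!t->pending)
        return 0;
    while (*pp && *pp != t)
        pp = &(*pp)->next;
    if (*pp)
        *pp = t->next;
    t->next = NULL;
    t->pending = 0;
    return 1;
}

/* Re-arms the timer; returns 1 if it was pending before. */
int dde_mod_timer(struct dde_timer *t, unsigned long expires)
{
    int was_pending = dde_del_timer(t);

    t->expires = expires;
    dde_add_timer(t);
    return was_pending;
}

/* One pass of the timer thread: run everything due at `now`. */
int dde_run_timers(unsigned long now)
{
    int n = 0;

    while (dde_timer_head && dde_timer_head->expires <= now) {
        struct dde_timer *t = dde_timer_head;

        dde_timer_head = t->next;   /* unlink first: one shot */
        t->next = NULL;
        t->pending = 0;
        t->function(t->data);
        n++;
    }
    return n;
}

/* Demo callback: sums up the data values of fired timers. */
unsigned long dde_timer_demo_sum;
void dde_timer_demo_cb(unsigned long data) { dde_timer_demo_sum += data; }
```

The timer thread would sleep until the expiry of the list head (or until the list changes) and then call dde_run_timers() with the current jiffies value.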

The driver-specific components of the local environments can differ greatly in scope and require an exact analysis of the corresponding device drivers and subsystems. I therefore do not give a generally valid design here but describe one environment as an example: sound.

Sound drivers under Linux export character-device interfaces for the digital-to-analog converter, the mixer chip and the MIDI component. How many interfaces are offered depends on the device driver and the actual device, since, for example, a card may also carry several DSPs for signal conversion.

The Linux sound interface is compatible with the Open Sound System (OSS), a platform-independent interface to audio devices.

A central component, the sound core, takes on a coordinating role, and all audio device drivers are reachable under the same major number. If a D/A converter is to be opened, the open routine of the central component is called and the "true" interface is supplied as the return value. The sound core represents the sound subsystem.

The actual device drivers register with the coordinator through a defined interface, i.e. they register the interfaces they would like to export, e.g. register_sound_dsp() for a D/A-A/D converter.
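The coordinating role can be pictured as a small table of registered interfaces. The following sketch is an assumption-laden simplification: struct dde_sound_ops, dde_register_sound(), dde_unregister_sound() and dde_soundcore_open() are hypothetical names, and the real Linux functions take different arguments and assign minor numbers differently.

```c
#include <stddef.h>

#define DDE_SOUND_MAX 16

/* Hypothetical operations table of one exported interface. */
struct dde_sound_ops {
    int (*open)(void);
};

/* Table kept by the coordinator: unit number -> registered interface. */
static const struct dde_sound_ops *dde_sound_units[DDE_SOUND_MAX];

/* Called by a driver to export an interface; returns its unit or -1. */
int dde_register_sound(const struct dde_sound_ops *ops)
{
    for (int i = 0; i < DDE_SOUND_MAX; i++) {
        if (dde_sound_units[i] == NULL) {
            dde_sound_units[i] = ops;
            return i;
        }
    }
    return -1;  /* table full */
}

void dde_unregister_sound(int unit)
{
    if (unit >= 0 && unit < DDE_SOUND_MAX)
        dde_sound_units[unit] = NULL;
}

/* Central open routine: hands back the "true" interface, or NULL. */
const struct dde_sound_ops *dde_soundcore_open(int unit)
{
    if (unit < 0 || unit >= DDE_SOUND_MAX)
        return NULL;
    return dde_sound_units[unit];
}
```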

The mapping onto DROPS is thus intuitive and faithful to the original: a sound core thread (coordinator) and one thread for each interface of the registered device driver. A possible optimization is to do without the coordinator thread by registering each interface thread with the central name service, so that potential clients contact the threads directly.

The question here is what the name service under DROPS will look like in the future and whether all information is supposed to be administered centrally. Since no information on this exists and a later adaptation requires little effort, I decided in favor of the coordinator.

For DROPS components, each thread should export an IPC interface modeled on OSS (possibly using the DSI [ LHR01 ]).

In Linux 2.4 two types of sound drivers exist. First, there are the so-called native drivers, which belong directly to the Linux kernel. In addition there is the OSS/Free package, which contains part of the commercially distributed Open Sound System device drivers and is maintained by its author.

The OSS/Free drivers are also part of the standard Linux sources but presuppose a different environment. Therefore a software layer exists between the actual sound core and the drivers, which on the one hand provides this environment and on the other hand maps onto the native sound subsystem.

Thus, if one wants to use device drivers from OSS/Free in the DDE, not only these but also their subsystem wrapper must be integrated into the driver server.

Fig. 3.3 illustrates the structure of the driver server. As with the I/O server, the local Common L4 Environment is part of every server here as well.

In summary, this results in the architecture shown in fig. 3.4. The only system component that runs in privileged processor mode with its own address space is the microkernel.

Above it lies a layer of runtime components, executed as user-mode servers, which support the Common L4 Environment [ Reu01 ] and thus form the minimal basis for applications under L4/Fiasco or DROPS. The I/O server is part of this layer, alongside components such as a memory server that provides physical memory in the form of dataspaces [ L$^+$99 ].

Above this runtime layer sit system-level components such as file systems, device drivers or the network protocol stack. The device driver shown in fig. 3.4 consists of three parts, as described in section 3.3, and runs as an independent server on the microkernel. It can also be merged with other components here (see 2.1: colocation) in order to increase performance.

In the following section I will discuss how the design ideas were realized in code and how the problems that emerged in the process were solved.

This section describes the implementation phase for the two DDE components: the I/O server [ Hel01a ] and the Linux libraries [ Hel01b ]. Accordingly, I have divided it into two large parts and begin with the remarks on the I/O server.

In addition to the following remarks, the definition of the I/O server interface (IDL) is given in appendix A.

As sketched in section 3.2, the I/O server contains a module for administering system resources. For each of the three resources (I/O ports, I/O memory and ISA DMA channels) one interface call each is provided for request and release.

Each resource is assigned only once, and all further requests fail until it is released by the current owner. This specification is supported by the assumption that, for example, memory-mapped I/O regions are initially available only to the I/O server. On request, the server can map these regions (memory pages) into a client with the help of the microkernel's mechanisms. The client can then access them at will. A similar mechanism is used for regions in the I/O port address space.
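The exclusive-assignment rule can be sketched as a table of allocated regions with an overlap check. The names io_request_region() and io_release_region() echo the IDL in the appendix, but the explicit owner argument, the fixed-size table and the return values are assumptions of this sketch; in the server the owner would presumably be derived from the IPC sender.

```c
#include <stddef.h>

#define IO_MAX_REGIONS 32

struct io_region {
    unsigned long start;
    unsigned long len;
    int owner;      /* hypothetical client id */
    int used;
};

static struct io_region io_regions[IO_MAX_REGIONS];

/* Returns 0 on success, -1 if the range is taken or the table is full. */
int io_request_region(int owner, unsigned long addr, unsigned long len)
{
    struct io_region *free_slot = NULL;

    if (len == 0 || addr + len < addr)
        return -1;  /* empty or wrapping range */

    for (int i = 0; i < IO_MAX_REGIONS; i++) {
        struct io_region *r = &io_regions[i];

        if (!r->used) {
            if (!free_slot)
                free_slot = r;
            continue;
        }
        /* Two half-open ranges overlap if each starts before the other ends. */
        if (addr < r->start + r->len && r->start < addr + len)
            return -1;
    }
    if (!free_slot)
        return -1;

    *free_slot = (struct io_region){ addr, len, owner, 1 };
    return 0;
}

/* Only the current owner may release exactly the region it requested. */
int io_release_region(int owner, unsigned long addr, unsigned long len)
{
    for (int i = 0; i < IO_MAX_REGIONS; i++) {
        struct io_region *r = &io_regions[i];

        if (r->used && r->owner == owner &&
            r->start == addr && r->len == len) {
            r->used = 0;
            return 0;
        }
    }
    return -1;
}
```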

The central administration of ISA DMA channels raises further problems, which I treat only briefly here, since the DMA controller, although present, is no longer used in modern systems, with few exceptions such as floppy drives, and other solutions such as programmed I/O usually exist.

The DMA controller in PC systems can be used in different modes, of which only cascade mode can be used without reprogramming the controller after each transfer. Since DMA channels are programmed via ports, a problem arises here similar to that of programming the interrupt controller (see section 4.1.3).

In order to implement safe programming of the DMA controller, this would have to take place in the I/O server and be usable via a suitable interface. However, since the frequent reprogramming (for floppy drives, for example, after at most 512 transferred bytes) should be as fast as possible so as not to cancel out the advantages of a DMA transfer, this solution does not seem suitable.

An exception here is cascade mode (also called ISA bus mastering). In this mode the device itself takes over the programming of the controller while it occupies the ISA bus. The DMA controller only has to be switched into this mode once, initially, by the CPU, and no further explicit synchronization of port accesses is necessary, since the hardware hides this.

The Linux kernel contains a PCI subsystem consisting of two parts: an architecture-dependent one and a general, cross-platform one. Since a clearly defined interface to it exists in version 2.4 of the kernel as well, and it places only few demands on the kernel environment, the subsystem can easily be extracted from the kernel and used in a narrow emulation environment.

The I/O server takes advantage of this property and uses an internal library, consisting of unmodified Linux sources of the subsystem and the necessary emulation environment, for accesses to the PCI bus and administration of the attached devices.

The scope of the emulation is limited here to simple memory allocation and release as well as procedures for calling the I/O resource management.

The kernel-internal interface of the PCI subsystem was adopted 1:1 for the I/O server, so all functions that Linux supports are exported. In addition, a data type for a PCI device was defined after the original model, which makes all relevant information available.

The part of the I/O server that is responsible for interrupt handling and controller programming is based on the findings and the reference implementation of [ LH00 ].

Part of the server is thus a further internal library made up of slightly modified sources of the Omega0 server and a minimal emulation environment. The I/O server thereby offers the Omega0 interface and can replace the independent original server completely transparently.

The dde_test libraries provide the local emulation environment for Linux device drivers. The results of this work are the general functions in dde_test common and the sound subsystem in dde_test sound. In the following I will describe the implementation of these components.

The two memory pools were implemented with the LMM library from the OSKit, which allows pools with one or more regions to be managed.

For the vmem pool, a region is thus created initially and associated with a requested dataspace for main memory. Following [ L$^+$99 ], the mapping from address-space region to dataspace is performed by a dedicated instance within the L4 task, the region mapper. The dataspace may contain "gaps" at any point in time, i.e. page faults on accesses to addresses within the vmem region are permitted and are resolved by the region mapper.

The kmem pool also contains only one region at the beginning, whose dataspace, however, holds pinned and physically contiguous memory. If the LMM library determines during an allocation that the pool does not contain enough memory, a so-called moreKernel() function is called. This function can now insert new areas into the pool. In our case a new region with an associated dataspace is created and added to the pool.

A new region must be created so that the LMM does not, because of virtually contiguous addresses, allocate areas that cross dataspace boundaries. Otherwise the property of physical contiguity would not be guaranteed in every case.

Besides the kmem LMM pool, a table is maintained that records, for each dataspace start address, the mapping from virtual to physical address. The physical addresses of the pinned memory areas can be queried from the dataspace manager, which is done when the new LMM region is created.

The Linux functions virt_to_phys() and phys_to_virt() now operate on this table.
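A minimal sketch of such a table-based address translation is given below. The names kmem_add_region(), dde_virt_to_phys() and dde_phys_to_virt(), the fixed table size and the use of 0 as the "not found" result are assumptions made for illustration; addresses are plain integers here rather than pointers.

```c
#include <stddef.h>

#define KMEM_MAX_REGIONS 16

/* One entry per kmem region: each is physically contiguous by itself. */
struct kmem_region {
    unsigned long virt;     /* start address inside the L4 task */
    unsigned long phys;     /* start address in physical memory */
    unsigned long size;     /* length in bytes */
};

static struct kmem_region kmem_regions[KMEM_MAX_REGIONS];
static int kmem_nregions;

/* Record a new region, e.g. after the pool was extended. */
int kmem_add_region(unsigned long virt, unsigned long phys,
                    unsigned long size)
{
    if (kmem_nregions == KMEM_MAX_REGIONS || size == 0)
        return -1;
    kmem_regions[kmem_nregions++] = (struct kmem_region){ virt, phys, size };
    return 0;
}

/* Returns the physical address, or 0 if virt is in no kmem region. */
unsigned long dde_virt_to_phys(unsigned long virt)
{
    for (int i = 0; i < kmem_nregions; i++) {
        const struct kmem_region *r = &kmem_regions[i];

        /* Unsigned subtraction also rejects virt < r->virt. */
        if (virt - r->virt < r->size)
            return r->phys + (virt - r->virt);
    }
    return 0;
}

/* Returns the virtual address, or 0 if phys is in no kmem region. */
unsigned long dde_phys_to_virt(unsigned long phys)
{
    for (int i = 0; i < kmem_nregions; i++) {
        const struct kmem_region *r = &kmem_regions[i];

        if (phys - r->phys < r->size)
            return r->virt + (phys - r->phys);
    }
    return 0;
}
```

Two regions that are adjacent in virtual memory may lie far apart physically, which is why the lookup is done per region.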

The semantics of the scheduling functions were adapted to the pattern sketched in the design.


The schedule() function now has the structure shown in fig. 4.1. A corresponding wake_up() calls l4_semaphore_up(&process->dde_sem) on a wait queue entry and thereby unblocks the process context.

Besides this standard version there is also a schedule_timeout(to) function, which returns after at most to time steps. The implementation is based on normal scheduling and a timer that calls a modified wake_up() after to.

Interrupt handling in Linux is performed by the ISR, which is registered by means of request_irq(irq, isr). In the DDE a thread is started in response, which contacts an Omega0 instance and requests delivery of interrupt events.


When an event arrives, the ISR ( IRQ_handler ) is called. The ISR thread is shown in fig. 4.2.
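The body of such an ISR thread can be sketched as a simple loop. To keep the example free of assumptions about the real Omega0 calls, the event source is abstracted behind two hypothetical function pointers (attach and wait); struct dde_irq_source, struct dde_irq_ctx, dde_irq_thread() and the demo functions are likewise invented for illustration, and the handler signature is simplified compared with Linux 2.4.

```c
#include <stddef.h>

/* Simplified ISR signature (no register argument). */
typedef void (*dde_isr_t)(int irq, void *dev_id);

/* Hypothetical event source standing in for the Omega0 instance. */
struct dde_irq_source {
    int (*attach)(int irq);  /* 0 on success */
    int (*wait)(int irq);    /* blocks; 0 = interrupt, <0 = stop */
};

/* What request_irq() would hand to the newly started thread. */
struct dde_irq_ctx {
    int irq;
    dde_isr_t isr;
    void *dev_id;
    const struct dde_irq_source *src;
};

/* Thread body: returns the number of handled events, -1 on attach failure. */
long dde_irq_thread(struct dde_irq_ctx *ctx)
{
    long handled = 0;

    if (ctx->src->attach(ctx->irq) != 0)
        return -1;

    /* Wait for the next interrupt event and call the registered ISR. */
    while (ctx->src->wait(ctx->irq) == 0) {
        ctx->isr(ctx->irq, ctx->dev_id);
        handled++;
    }
    return handled;
}

/* Demo source: delivers a fixed number of fake interrupts. */
int dde_irq_demo_pending = 3;
int dde_irq_demo_attach(int irq) { (void)irq; return 0; }
int dde_irq_demo_wait(int irq)
{
    (void)irq;
    return dde_irq_demo_pending-- > 0 ? 0 : -1;
}
/* Demo ISR: counts invocations in the int that dev_id points to. */
void dde_irq_demo_isr(int irq, void *dev_id)
{
    (void)irq;
    ++*(int *)dev_id;
}
```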

The implementation for deferred activities is similar. Here, too, a thread is started at the beginning, but it immediately blocks on a semaphore that was initialized with 0.


If the ISR now triggers a bottom half, the thread unblocks and processes all pending functions. Afterwards it goes back to sleep and the whole process starts over.

So far the DDE supports only two tasklet priorities here, i.e. no "real" softirq semantics are implemented. This is, however, sufficient for device drivers in Linux 2.4.

The two priorities are represented by the paths HI_SOFTIRQ and TASKLET_SOFTIRQ: the former executes high-priority deferred activities, e.g. old-style BHs, in tasklet_hi_action(), and the latter executes normal-priority ones in tasklet_action().
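One pass of the deferred-activity thread over the two priorities can be sketched as follows. The struct dde_tasklet layout, the DDE_* constants and the function names are hypothetical; the semaphore that wakes the thread and any locking against the ISR thread are deliberately left out, so the sketch only shows the ordering rule.

```c
#include <stddef.h>

/* Hypothetical indices for the two supported priorities. */
enum { DDE_HI_SOFTIRQ = 0, DDE_TASKLET_SOFTIRQ = 1, DDE_NR_PRIO = 2 };

struct dde_tasklet {
    void (*func)(unsigned long);
    unsigned long data;
    struct dde_tasklet *next;
    int scheduled;              /* 1 while queued */
};

static struct dde_tasklet *dde_tasklet_pending[DDE_NR_PRIO];

/* Called from the ISR; the real version would also wake the thread. */
void dde_tasklet_schedule(struct dde_tasklet *t, int prio)
{
    if (t->scheduled)
        return;                 /* already queued: it runs only once */
    t->scheduled = 1;
    t->next = dde_tasklet_pending[prio];
    dde_tasklet_pending[prio] = t;
}

/* One pass after wake-up: high priority first, then normal priority. */
int dde_run_tasklets(void)
{
    int n = 0;

    for (int prio = 0; prio < DDE_NR_PRIO; prio++) {
        struct dde_tasklet *list = dde_tasklet_pending[prio];

        dde_tasklet_pending[prio] = NULL;
        while (list) {
            struct dde_tasklet *t = list;

            list = t->next;
            t->scheduled = 0;   /* may be rescheduled from func */
            t->func(t->data);
            n++;
        }
    }
    return n;
}

/* Demo function: records the order in which tasklets ran. */
char dde_tasklet_demo_log[8];
int dde_tasklet_demo_len;
void dde_tasklet_demo_record(unsigned long tag)
{
    if (dde_tasklet_demo_len < 7)
        dde_tasklet_demo_log[dde_tasklet_demo_len++] = (char)tag;
}
```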

All necessary functions that the I/O server provides are implemented through calls to the IO IPC stubs. Here the types specified earlier (section 4.1) have to be converted into Linux-internal ones.

The one-shot timers are executed by a separate thread, as described in the design. If no functions are to be executed, the thread sleeps (IPC receive operation) and thus consumes no CPU time. The timer lists were taken over from Linux with slight changes (see [ Hel01b ] lib/src/common/time.c ).

The sound components have not yet been completely separated from the drivers, and the interface threads do not exist yet either. However, it is easy to derive from the design how the library can be implemented.

Thus a sound subsystem must be provided to the device drivers that implements the sound core interface but also creates the necessary interface threads. The main thread at start time is the sound core thread. It calls the initialization routine of the device driver and afterwards registers itself with the name service as the sound coordinator.

During initialization the necessary threads are created; for example, a register_sound_dsp() call creates a thread for a DSP device (fig. 3.3).

User applications can now contact the device via an OSS-like interface. The common library hides the synchronization of the threads.

The test device was a Soundblaster 128 PCI sound card based on the es1371 chipset. The corresponding Linux driver is es1371.c. Here I will discuss one peculiarity concerning this driver.

It concerns the synchronization of process contexts via wait queues. As I described in section 2.5.2, kernel functions exist for this purpose ( sleep_on() ), whose structure is shown in fig. 4.4.


The process context changes its state to blocked and joins the wait queue. Subsequently schedule() is called in order to initiate the blocking and wait for the event that the queue represents. After waking up, the context removes itself from the wait queue.

The es1371 driver, however, does not use this function but reproduces the behavior itself (fig. 4.5). It first enqueues itself in the wait queue "prophylactically" and removes itself again at the end of the function. Blocking is initiated only if a certain event has not occurred.


If the emulation expected wait queue synchronization to be performed only via the interface discussed, the driver would not be executable. However, since the schedule() function itself is emulated in the DDE, this more general case also leads to a functioning device driver.

This peculiarity shows that an emulation can be regarded as generally valid only conditionally, since it cannot be foreseen which special situations may occur or how much imagination some programmers have. An exact analysis of the kernel and, on a sampling basis, of the device drivers should minimize this weakness.

Since the implementation has not yet been fully completed, I can give only estimates of the performance of device drivers within the DDE here.

One factor that influences performance, more precisely latency, is the use of an Omega0 server. Since interrupt events in microkernels are delivered by IPC, delays until acknowledgement are larger in these systems than with monolithic approaches. The Omega0 server intensifies this by introducing another level of indirection between interrupt source and driver. Thus two IPC messages are necessary for each interrupt before it reaches the driver server.

Synchronization in the DDE is based on the standard lock and semaphore implementations. These use atomic operations and, in the blocking case, IPC. Thus at least blocking and waking up are more costly than in Linux: they require two IPC messages.

A further delay is to be expected when the kernel memory pool is fully occupied. In this case the moreKernel() function must request new dataspaces and add them to the pool. This process involves several IPCs within the L4 task (region mapper) and to the outside (dataspace manager). These effects can be reduced by analyzing the memory requirements of the device driver and the device driver server and adapting the initial size of the pool according to the results.

Overall, the IPC factor is very important in microkernel-based systems. Since the L4 or Fiasco microkernel provides fast IPC, it is to be expected that the influence of the architecture on performance is not very large.

In this diploma thesis an environment for Linux device drivers under DROPS was designed. The path from the exact analysis of the original environment to the implementation of the runtime components was described in order to document a generalized process for developing such environments. Device drivers for sound cards under Linux served as the case study.

It became apparent in the course of the work that the port is feasible; a performance measurement, however, was not carried out owing to the incomplete implementation. Here I could conclude only from preliminary considerations that the performance losses will stay within reasonable bounds.

For the future of this project, the primary tasks are to complete the implementation and to extend it to other device classes. Afterwards a performance analysis can be carried out in order to check the assumption stated in the last paragraph.

Another aspect for the environment would be to port not only device drivers but also to extract other components and use them under DROPS, e.g. the TCP/IP stack from the Linux kernel.

Finally, it remains interesting to see what effects or advantages a working SMP Fiasco system has on the developed environment, because the multi-threaded concept should pay off there.

This can be found in the IO package [ Hel01a ] ( io/idl/io.idl ).

    /** CORBA IDL based function interfaces for L4 system */
    module l4 {
      /** L4 IO server interface */
      interface io {
        /** Miscellaneous services */

        /** register new IO client */
        long register_client(in l4_io_drv_t type);

        /** unregister IO client */
        long unregister_client();

        /** initiate mapping of IO info page */
        long map_info(out fpage info);

        /** Resource allocation */

        /** register for exclusive use of IO port region */
        long request_region(in unsigned long addr, in unsigned long len);

        /** release IO port region */
        long release_region(in unsigned long addr, in unsigned long len);

        /** register for exclusive use of `IO memory' region */
        ...

        /** PCI services */

        /** search for PCI device by vendor/device id */
        long pci_find_device(in unsigned short vendor_id,
                             in unsigned short device_id,
                             in l4_io_pdev_t start_at,
                             out l4_io_pci_dev_t pci_dev);

        /** read configuration byte register of PCI device */
        long pci_read_config_byte(in l4_io_pdev_t pdev, in long offset,
                                  out octet val);

        /** write configuration byte register of PCI device */
        long pci_write_config_byte(in l4_io_pdev_t pdev, in long offset,
                                   in octet val);
      };
    };

This can be found in the omega0 package ( l4/omega0/client.h ).

    /* attach to irq line */
    extern int omega0_attach(omega0_irqdesc_t desc);

    /* detach from irq line */
    extern int omega0_detach(omega0_irqdesc_t desc);

    /* request for certain action */
    extern int omega0_request(int handle, omega0_request_t action);

This can be found in the IO [ Hel01a ] ( l4/io/types.h ) and omega0 packages ( l4/omega0/client.h ).

    /* l4_io types */

    typedef unsigned long l4_io_pdev_t;

    typedef struct {
      unsigned long devfn;        /* encoded device & function index */
      unsigned short vendor;
      unsigned short device;
      unsigned short sub_vendor;
      unsigned short sub_device;
      unsigned long class;        /* 3 bytes: (base, sub, prog-if) */

      unsigned long irq;
      l4_io_res_t res[12];        /* resource regions used by device:
                                   * 0-5  standard PCI regions (base addresses)
                                   * 6    expansion ROM
                                   * 7-10 unused for devices */
      char name[80];
      char slot_name[8];

      l4_io_pdev_t handle;        /* handle for this device */
    } l4_io_pci_dev_t;

    /* omega0 types (look there...) */

    typedef struct {...} omega0_irqdesc_t;

    typedef struct {...} omega0_request_t;